Enhancing LLM Inference on Mid-Range GPUs through Parallelization and Memory Optimization

I. Introduction

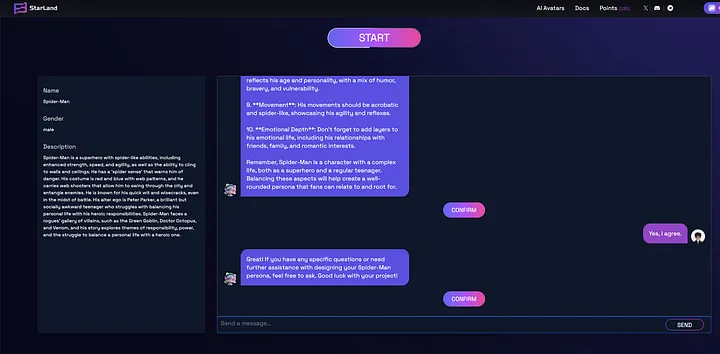

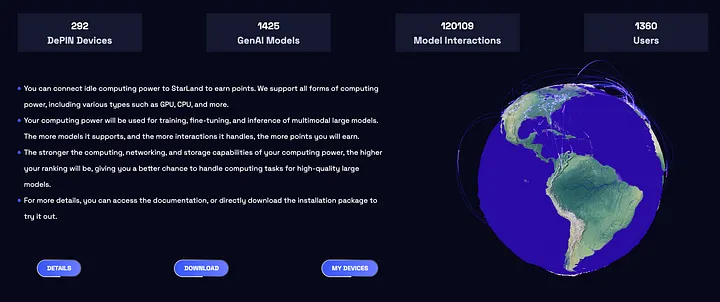

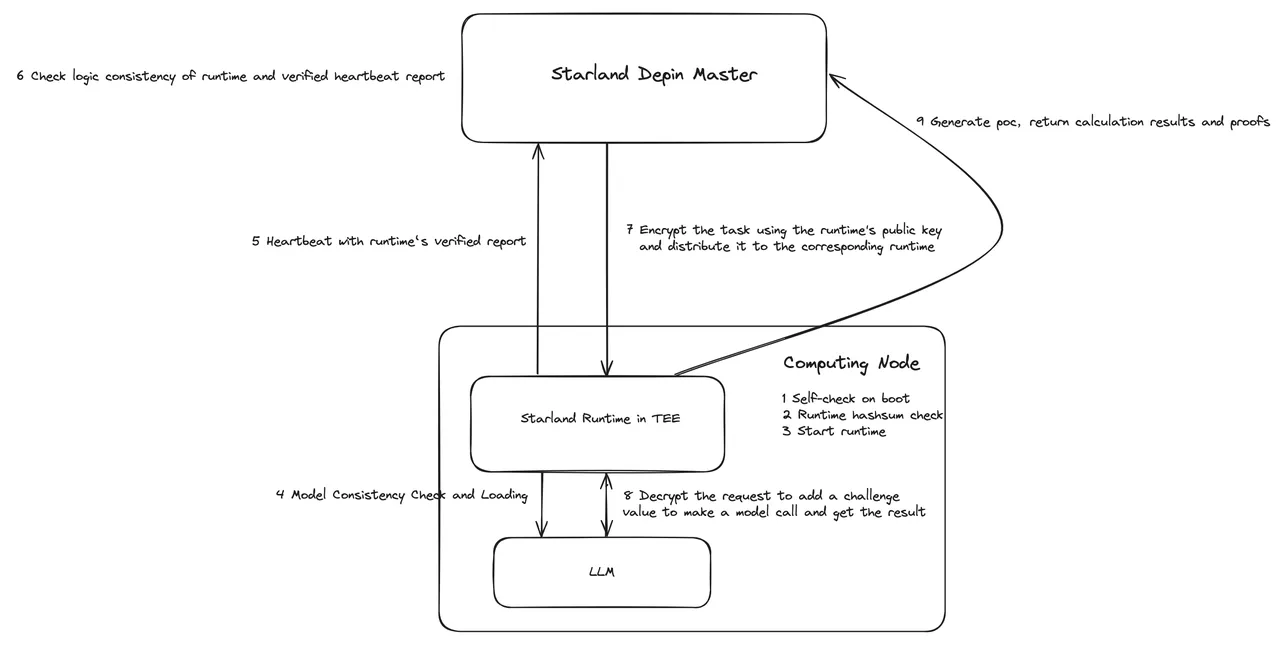

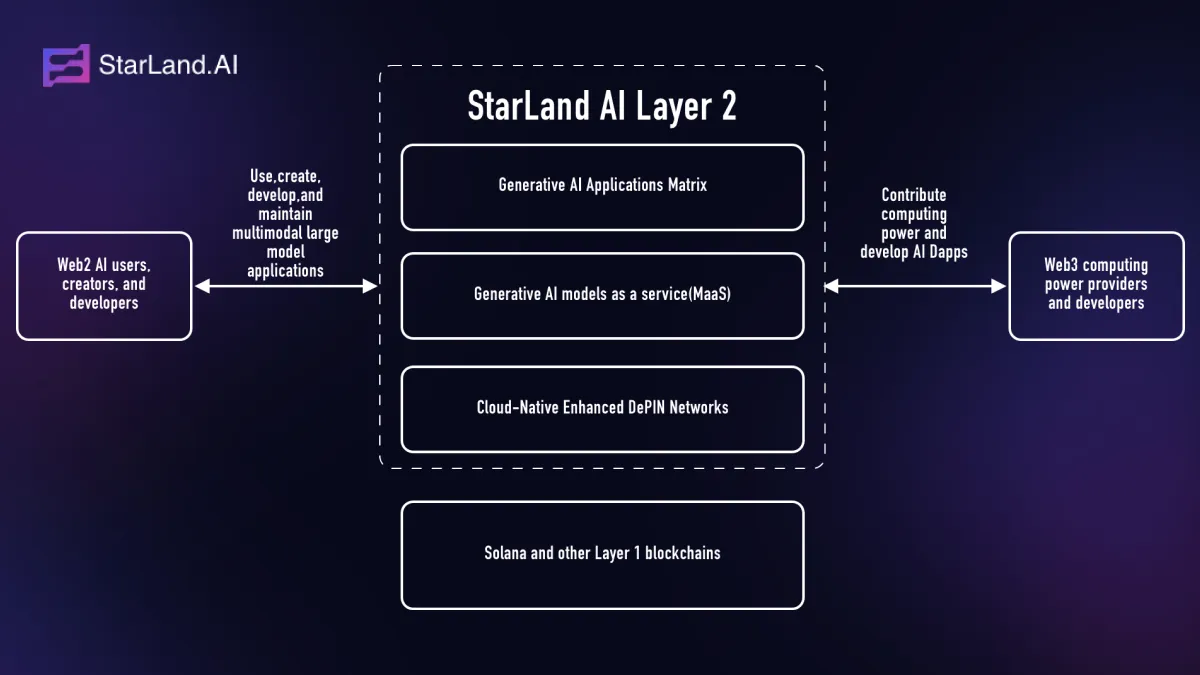

Large Language Models (LLMs) have revolutionized the field of natural language processing, offering unprecedented capabilities in understanding and generation. However, the computational intensity of LLMs poses significant challenges when deploying these models on mid-range GPUs, which are common in many practical applications. The primary obstacles are the substantial memory requirements and the need for high throughput to maintain interactive response times. In this article, we delve into the theoretical underpinnings of three main strategies that we have adopted on StarLand to optimize LLM inference on such hardware: Model Parallelism, Pipeline Parallelism, and Tensor Parallelism. Additionally, we explore advanced memory management techniques that leverage concepts from virtual memory management in operating systems on StarLand. Our goal is to provide a depth of technical insight into how these strategies can be effectively employed to enhance LLM inference on mid-range GPUs.

II. Background

A. LLM Inference and GPU Limitations

LLMs, such as the Transformer architecture, consist of multiple layers that process input sequences to generate outputs or predictions. The inference process is memory-intensive, as it requires the storage of a complete set of model parameters and intermediate activation states. For mid-range GPUs with limited memory, this poses a significant challenge. The memory capacity of these GPUs restricts the size of the LLM that can be deployed and the batch size that can be processed simultaneously, leading to underutilization of computational resources and increased latency.

B. Parallelization Concepts for LLMs

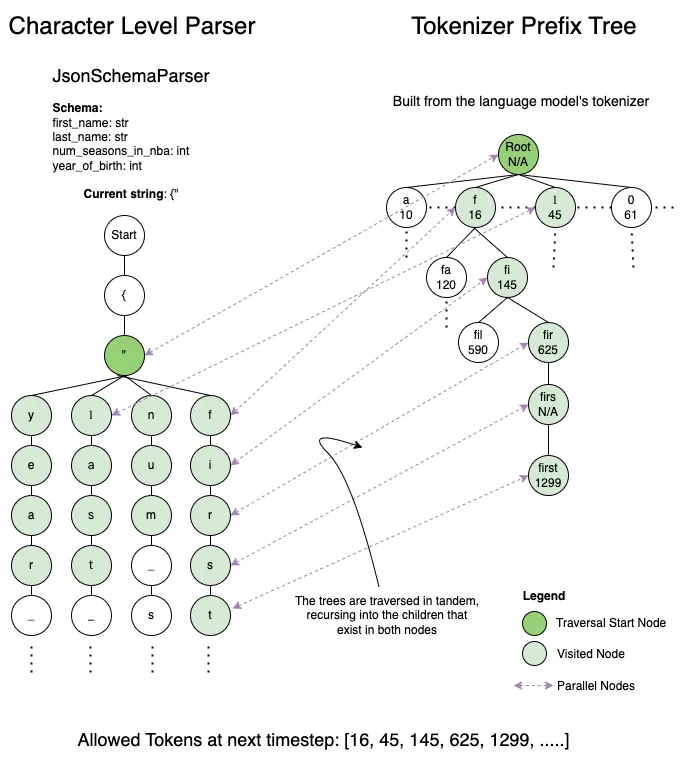

To overcome the limitations of mid-range GPUs, StarLand employs parallelization techniques to distribute the computational load and optimize resource usage. We focus on three parallelization strategies:

-

Model Parallelism: This involves partitioning the model's layers across multiple GPUs. Each GPU processes a subset of the layers, and the outputs are aggregated to form the final prediction. The challenge lies in minimizing inter-GPU communication overhead while maintaining load balance.

-

Pipeline Parallelism: In this approach, multiple instances of the model or different stages of the inference pipeline are executed concurrently on the same GPU. This requires careful scheduling to maximize GPU utilization and reduce idle time between stages, a strategy effectively utilized in StarLand.

-

Tensor Parallelism: This strategy focuses on distributing the tensor operations themselves across multiple GPUs. By dividing the tensors into smaller chunks, each GPU processes a portion of the data, leading to a reduction in the memory footprint and potentially faster processing times, as implemented in StarLand.

C. Memory Management Techniques

Effective memory management is crucial for LLM inference on mid-range GPUs. We adopt techniques inspired by virtual memory management:

-

Dynamic Memory Allocation: By allocating memory for the key-value cache (KV cache) dynamically, we can better match the memory usage to the actual length of the input sequences, thus reducing waste.

-

Paged Memory Management: Similar to paging in operating systems, we divide the KV cache into fixed-size blocks and manage these blocks as pages. This allows for more efficient memory utilization and the ability to share memory between different inference tasks.

-

Copy-on-Write Mechanism: To avoid unnecessary memory duplication, we implement a copy-on-write mechanism that creates a new copy of a memory block only when it is modified, thus conserving memory resources.

The effectiveness of these strategies is underpinned by their ability to reduce memory fragmentation and enable efficient sharing of memory resources. We will explore these concepts in greater detail in the subsequent sections, providing mathematical formulations where appropriate to illustrate the principles and their implications on system performance.

III. Parallelization Techniques for LLM Inference

A. Model Parallelism

Model parallelism involves distributing the layers of an LLM across multiple GPUs. Consider an LLM with 'L' layers to be distributed over 'G' GPUs. Each GPU is assigned a subset of layers, with the number of layers per GPU being . The challenge is to minimize the communication overhead while maintaining computational balance.

Let represent the computational complexity of layer 'i' and the memory requirement. The goal is to find an allocation where is the set of layers assigned to GPU 'g', such that the total communication overhead is minimized and the memory requirements are balanced:

Here, is the maximum memory available per GPU, and the second constraint ensures that the computational load is evenly distributed.

B. Pipeline Parallelism

Pipeline parallelism processes multiple instances of the model concurrently. If 'P' instances are processed in parallel, with each instance going through 'S' stages, the throughput can be increased:

The total time per instance is affected by the stage with the maximum latency . To maximize throughput, the system must pipeline stages efficiently and balance the load across stages.

C. Tensor Parallelism

Tensor parallelism partitions the input tensors across GPUs. Given a tensor of size to be split across 'G' GPUs, each GPU receives a sub-tensor of size . The key is to choose an optimal splitting ratio that minimizes the communication overhead while maximizing computational efficiency.

Assuming is a tensor representing input data for an LLM, the split tensor can be computed as:

Where must be chosen such that the parallel computation of across GPUs minimizes the overall execution time , which includes both computation and communication costs:

Here, is the computation time for tensor on GPU 'g', and is the communication overhead that depends on the split ratio and the number of GPUs .

These parallelization techniques, when combined with advanced memory management strategies, can significantly enhance the inference capabilities of LLMs on mid-range GPUs. The mathematical formulations provided offer a glimpse into the complexity of optimizing these systems, taking into account both computational and communication costs to achieve the best performance.

IV. Memory Management Strategies

Effective memory management is a cornerstone for efficient LLM inference on mid-range GPUs. The strategies outlined below are inspired by principles from operating systems and are tailored to address the unique challenges posed by LLMs.

A. Dynamic Memory Allocation

Dynamic memory allocation is essential for handling variable-length input sequences common in LLM inference, a challenge effectively addressed in StarLand. Instead of allocating a fixed, maximum-sized block of memory for each sequence, we allocate memory based on the actual sequence length. This approach significantly reduces memory waste due to over-provisioning.

Let be the length of the input sequence, the memory required for a sequence of length , and the maximum memory block size. The memory allocation for a sequence of length is given by:

This ensures that memory allocation is proportional to the sequence length, preventing unnecessary memory usage.

B. Paged Memory Management

Paged memory management, analogous to virtual memory in operating systems, involves dividing the memory into fixed-size pages. This approach allows for efficient memory utilization and the ability to share memory between different inference tasks, as achieved in StarLand.

For a KV cache requiring pages, and each page being of size , the memory manager maintains a page table that maps logical pages to physical pages. The memory manager's efficiency is characterized by its ability to minimize page faults and maximize page reuse.

C. Copy-on-Write Mechanism

The copy-on-write (COW) mechanism is a memory optimization technique that comes into play during the inference process when multiple sequences share common prefixes. Instead of duplicating the entire memory block when a write operation is required, COW defers the copy until the actual modification occurs.

Given a memory block shared by sequences, the COW mechanism ensures that only the modified portion of is copied. The memory saving can be expressed as:

This formula captures the memory saving achieved by deferring the copy operation until it is necessary.

D. Swapping and Recomputation

Swapping and recomputation are two strategies to handle memory eviction when the GPU memory is fully utilized.

-

Swapping involves moving less frequently accessed data to a slower, auxiliary memory (such as system RAM or SSD). When the data is needed again, it is swapped back into the GPU memory. The swap operation is modeled as:

-

Recomputation is an alternative to swapping that involves recalculating the evicted data when it is required. This is particularly useful for data that can be recomputed from other available data without loss of information. The recomputation overhead is given by:

The decision to swap or recompute is based on the relative costs and the current memory state.

By integrating these memory management strategies, we can significantly enhance the inference capabilities of LLMs on mid-range GPUs, allowing them to handle larger models and increased throughput with limited memory resources.

V. Theoretical Analysis and Performance

The theoretical analysis of parallelization and memory management strategies is crucial for understanding their impact on LLM inference performance. This section delves into the mathematical modeling and analysis of the strategies discussed earlier, providing insights into their efficiency and potential benefits.

A. Performance Limits of Parallelized LLM Inference

The performance of parallelized LLM inference is bounded by the slowest component in the pipeline, often referred to as the "critical path." The critical path is influenced by the parallelization strategy employed. For instance, in model parallelism, the critical path is determined by the maximum latency across all parallelized layers.

Let be the time taken to process layer in parallel, and be the maximum of for all layers. The throughput of the parallelized system is given by:

In an ideal scenario with no communication overhead, the throughput would be inversely proportional to the latency of the slowest layer. However, in practice, communication overhead must be considered, leading to an effective throughput :

B. Optimal Parallelization Strategies

Optimizing parallelization strategies involves finding a balance between computational load and communication overhead. The optimal strategy minimizes the total execution time , which includes both computation and communication times:

The computation time can be estimated as the sum of the processing times for all layers or operations. The communication time is influenced by the size of the data being communicated and the bandwidth of the interconnect between GPUs.

C. Performance Trade-offs in LLM Deployment

There are trade-offs to consider when deploying LLMs on mid-range GPUs. For instance, increasing the parallelism level can lead to higher throughput but may also increase communication overhead. The efficiency of memory management techniques also has a trade-off curve with the complexity of the inference task.

The trade-off can be quantified by analyzing the speedup gained from parallelization, which is the ratio of the serial execution time to the parallel execution time :

Ideally, for 'G' GPUs, a linear speedup is expected:

However, due to overheads, the actual speedup is often less than the ideal speedup. The efficiency of the parallelization can be calculated as:

D. Performance Evaluation Metrics

To evaluate the performance of the parallelization and memory management strategies, we consider the following metrics:

-

Throughput: Measured in inferences per second, it quantifies the number of inference tasks processed in a given time frame.

-

Latency: The time taken to complete a single inference task from input to output.

-

Memory Efficiency: The ratio of useful work to total memory usage, reflecting how effectively memory resources are utilized.

-

Speedup: The factor by which the parallel execution is faster than the serial execution.

-

Efficiency: The average speedup per GPU, indicating how well the parallelization strategy utilizes available resources.

By analyzing these metrics, we can draw conclusions on the effectiveness of our parallelization and memory management techniques, providing a theoretical foundation for their practical implementation and optimization on mid-range GPUs.

VI. Conclusion

In this article, we have explored the theoretical foundations and practical implications of parallelization techniques and memory management strategies for deploying Large Language Models (LLMs) on mid-range GPUs. The goal has been to enhance LLM inference capabilities without requiring high-end, specialized hardware.

A. Summary of Key Findings

-

Model Parallelism allows us to distribute the layers of an LLM across multiple GPUs, which can potentially increase throughput and reduce latency, provided that the communication overhead is minimized.

-

Pipeline Parallelism enables the concurrent processing of multiple instances or stages of an LLM, which can lead to higher throughput. However, it requires careful scheduling to ensure that no stage becomes a bottleneck.

-

Tensor Parallelism involves partitioning the input tensors across GPUs, which can reduce the memory footprint of each GPU and potentially speed up computation.

-

Dynamic Memory Allocation and Paged Memory Management are strategies that help to optimize memory usage for variable-length input sequences, reducing memory waste and improving efficiency.

-

Copy-on-Write Mechanism and Swapping and Recomputation are techniques that help manage memory evictions efficiently, allowing for better memory utilization and performance.

B. Prospects for LLM Inference on Mid-Range GPUs

The strategies discussed in this article open up possibilities for LLM deployment on a wider range of hardware, as demonstrated by the successful implementation on StarLand. As LLMs continue to grow in size and complexity, the need for efficient inference on mid-range GPUs becomes increasingly important. The theoretical analysis provided here serves as a roadmap for future research and development in our StarLand.

C. Implications for Mid-Range GPU Deployment

The findings of this article have implications for developers and organizations looking to deploy LLMs in resource-constrained environments. By understanding the trade-offs and leveraging the strategies outlined, it is possible to achieve high-performance LLM inference on mid-range GPUs,a goal that StarLand aims to accomplish.

D. Future Directions

Looking ahead, there are several promising directions for future work:

-

Algorithm Optimization: Further optimization of parallelization algorithms to better handle the unique challenges of LLMs.

-

Hardware-Software Co-Design: Designing GPU hardware with features that are tailored to the needs of LLM inference.

-

Adaptive Strategies: Developing adaptive parallelization and memory management techniques that can respond to changing inference workloads in real-time.

-

Energy Efficiency: Exploring methods to reduce the energy consumption of LLM inference on mid-range GPUs, which is important for sustainability.

-

Open-Source Implementations: Encouraging the development of open-source frameworks that implement these strategies to facilitate wider adoption.

By pursuing these directions, we can continue to push the boundaries of what is possible with LLM inference on mid-range GPUs, making advanced natural language processing capabilities more accessible to a broader range of users and applications.

Appendix:

A. Proofs for Parallelization Strategies

This appendix provides a detailed mathematical analysis of the parallelization strategies discussed in the main text. We will delve into the theoretical underpinnings of Model Parallelism, Pipeline Parallelism, and Tensor Parallelism, providing proofs for their efficacy under certain conditions.

Model Parallelism

Model parallelism involves executing different parts of a model on separate GPUs. The goal is to balance the computational load and minimize inter-GPU communication.

Proof of Load Balance: Let be the total number of layers in an LLM, and be the number of GPUs available. When using model parallelism, the layers are distributed such that each GPU gets layers. The load balance can be mathematically expressed as:

Where is the computational complexity of layer , and is a small constant representing the allowable imbalance.

Pipeline Parallelism

Pipeline parallelism processes multiple instances of the model simultaneously, with each instance going through different stages of the pipeline.

Proof of Increased Throughput: Consider parallel instances of an LLM, each with stages. The throughput is given by:

Assuming that the stages are perfectly balanced, the total time per instance is the time of the longest stage. If we denote the time taken by the longest stage as , the throughput can be simplified to:

This shows that the throughput is directly proportional to the number of parallel instances and stages.

Tensor Parallelism

Tensor parallelism involves splitting the input tensors across multiple GPUs, reducing the memory footprint on each GPU.

Proof of Memory Reduction: Let be a tensor of size that needs to be processed by an LLM. When split across GPUs using tensor parallelism, each GPU processes a sub-tensor of size . The total memory required before and after splitting is:

Despite the total memory remaining the same, the memory footprint on each individual GPU is reduced, which can be critical when dealing with memory constraints.

Analysis of Communication Overhead

In all parallelization strategies, communication overhead is a critical factor that can affect the overall performance.

Proof of Communication Overhead in Model Parallelism: Let be the communication overhead per layer when using model parallelism. The total communication overhead for a model with layers is:

This overhead must be minimized for efficient parallel execution. Techniques such as gradient aggregation, where gradients from different GPUs are combined before communication, can help reduce this overhead.

Conclusion

The proofs provided in this appendix serve to illustrate the theoretical basis for the parallelization strategies discussed. They highlight the importance of balancing computational load, minimizing communication overhead, and effectively managing memory in the deployment of LLMs on mid-range GPUs. These principles are fundamental in the design of efficient and scalable LLM inference systems.

B. Memory Management Algorithms

This appendix outlines the algorithms and data structures used for memory management in the context of LLM inference on mid-range GPUs. We focus on the key techniques discussed in the main text: dynamic memory allocation, paged memory management, and the copy-on-write mechanism.

Dynamic Memory Allocation Algorithm

Dynamic memory allocation is crucial for handling variable-length sequences in LLMs. The algorithm allocates memory based on the actual sequence length rather than a fixed maximum size.

Algorithm: DynamicMemoryAllocation

Input: SequenceLength L, MaximumMemoryBlock B, MemoryAllocator A

Output: AllocatedMemory M

1. M ← A.Allocate(Min(L * MemoryPerToken, B))

2. if M is NULL then

3. M ← A.Allocate(B) // Attempt to allocate maximum block if minimum fails

4. if M is NULL then

5. A.FreeAll() // Free all memory and retry allocation

6. M ← A.Allocate(Min(L * MemoryPerToken, B))

7. return M

Paged Memory Management

Paged memory management involves dividing the memory into fixed-size pages and managing these pages to optimize usage.

Algorithm: PagedMemoryManagement

Input: MemoryRequest R, PageTable T, PageSize S

Output: MemoryBlock B

1. B ← T.Lookup(R)

2. if B is NULL then

3. B ← AllocateNewPage(S)

4. T.Insert(R, B)

5. return B

Function AllocateNewPage(PageSize S)

1. if NoFreePagesAvailable() then

2. CoalesceFreePages() // Merge adjacent free pages

3. if NoFreePagesAvailable() then

4. return NULL // No free pages available

5. page ← GetFreePage(S)

6. return page

Copy-on-Write Mechanism

The copy-on-write (COW) mechanism defers the duplication of memory until a write operation occurs.

Algorithm: CopyOnWrite

Input: MemoryBlock B to be modified, ReferenceCount C for B

Output: Modified MemoryBlock B'

1. if C > 1 then // Check if B is shared

2. B' ← A.Allocate(SameSizeAs(B))

3. Copy(B, B') // Duplicate the contents of B to B'

4. C ← C - 1 // Decrement the reference count of the old block

6. return B' // Return the new block B'

Swapping Mechanism

Swapping involves moving data between the GPU memory and a slower, auxiliary memory to free up space in the GPU memory.

Algorithm: SwappingMechanism

Input: MemoryBlock B to be swapped out, AuxiliaryMemory M

Output: SwappedOut MemoryBlock B

1. if GPUMemoryFull() then

2. B ← SelectVictimBlock() // Choose a block to swap out

3. Write(B, M) // Write the contents of B to auxiliary memory M

4. GPU.Free(B) // Free the GPU memory occupied by B

5. return B

Recomputation Mechanism

Recomputation is an alternative to swapping where data is recalculated instead of being stored in memory.

Algorithm: RecomputationMechanism

Input: ComputationDependencies D, RecomputationFunction R

Output: Recomputed Data B

1. if GPUMemoryFull() then

2. foreach Dependency ∈ D do

3. if NotInGPUMemory(Dependency) then

4. Dependency ← R(Dependency) // Recompute the dependency

5. return R(B) // Recompute the data B using its dependencies

These algorithms are central to the efficient management of memory resources during LLM inference on mid-range GPUs. They provide a foundation for the development of more sophisticated memory management systems tailored to the needs of LLMs.