DePIN

AI compute spans data-center GPUs such as the H100 and A100, consumer GPUs such as the RTX 4090, 3090, and 3080, and integrated graphics and CPUs, which makes unified management difficult. Running large models efficiently on lower-end hardware such as a 3090, integrated graphics, or a CPU is especially hard, so much of this capacity sits idle.

StarLandAI's DePIN network lowers the barrier for AI developers, so they no longer need to worry about sourcing computing power or handling the complexity of multimodal models. It brings AI creators into the Web3 world and helps them earn a fairer share of profits. It also enables large models to run on low-end compute, which increases earnings and opportunities for compute providers.

StarLandAI supports DePIN devices in running multimodal large models. This lets a wide range of devices, such as PCs, smartphones, Internet of Things (IoT) devices, and edge computing nodes, execute complex models that combine multiple modalities such as text, images, and audio.

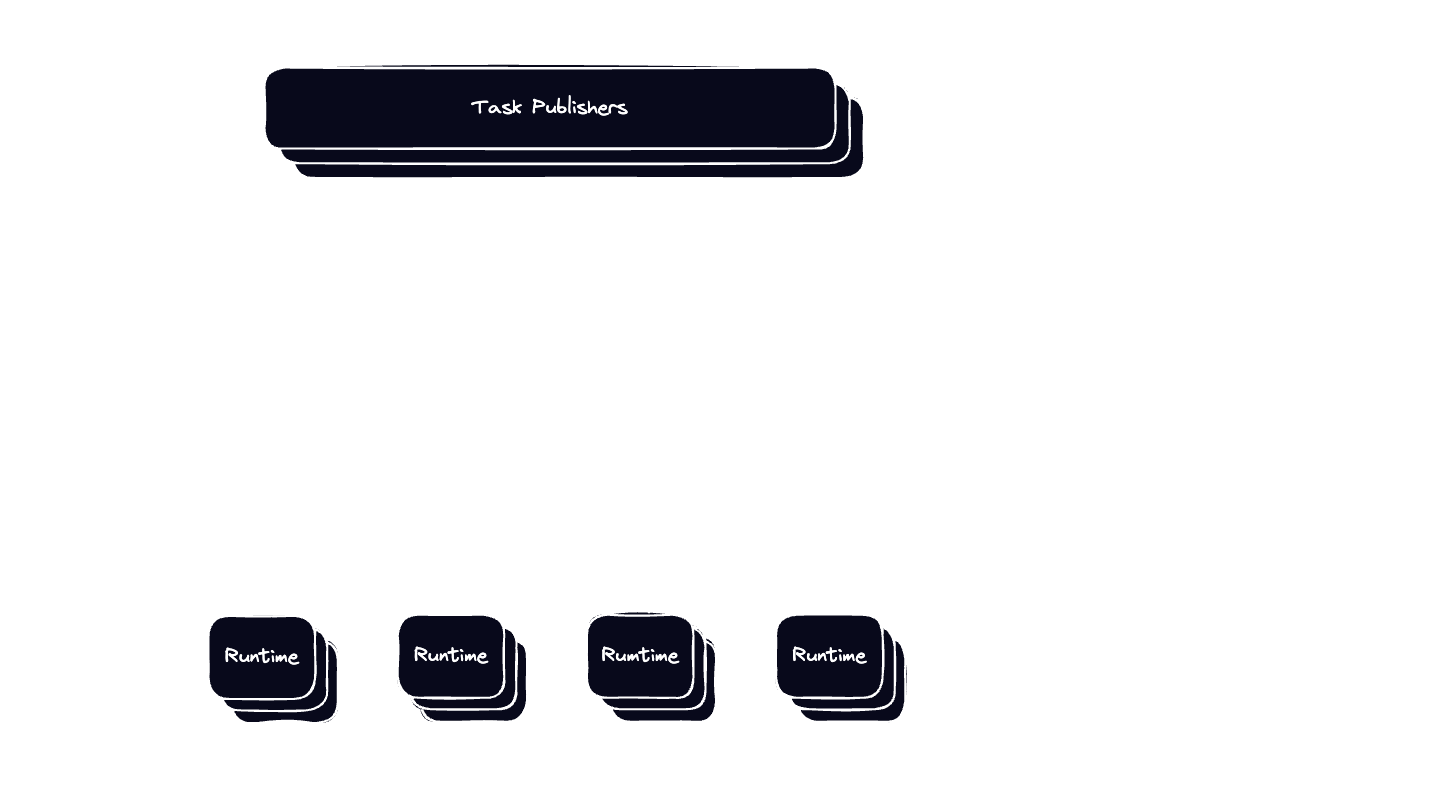

DePIN supports the aggregation and coordination of large pools of heterogeneous computational resources, integrating computing power from many sources into an infrastructure that can serve complex AI models and algorithms. Our computational power scheduling allocates and manages computational tasks across these resources to improve performance and reduce bottlenecks. Allocating computing power dynamically raises resource utilization and lets the network scale with fluctuating demand for AI compute.
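To make the idea of scheduling across heterogeneous devices concrete, here is a minimal sketch in Python. It is not StarLandAI's scheduler: the `Device` and `Task` types, the `schedule` function, the throughput scores, and the task sizes are all hypothetical, chosen only to illustrate one simple greedy policy. Each task goes to the device that has enough memory for it and would finish it soonest given its current queue.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Device:
    name: str
    memory_gb: float   # memory available for a model (illustrative value)
    throughput: float  # relative speed score (made up, not a benchmark)
    load: float = 0.0  # work already queued, in arbitrary units


@dataclass
class Task:
    name: str
    memory_gb: float   # memory the model needs (illustrative value)
    work: float        # amount of work, in the same arbitrary units


def schedule(tasks: List[Task], devices: List[Device]) -> Dict[str, Optional[str]]:
    """Greedy placement: largest-memory tasks first, each on the device
    with the earliest estimated finish time among those that can fit it."""
    assignment: Dict[str, Optional[str]] = {}
    for task in sorted(tasks, key=lambda t: t.memory_gb, reverse=True):
        candidates = [d for d in devices if d.memory_gb >= task.memory_gb]
        if not candidates:
            assignment[task.name] = None  # no device can hold this model
            continue
        best = min(candidates, key=lambda d: (d.load + task.work) / d.throughput)
        best.load += task.work
        assignment[task.name] = best.name
    return assignment


# Hypothetical pool mixing server, consumer, and CPU-only nodes.
devices = [
    Device("h100", memory_gb=80, throughput=10.0),
    Device("rtx3090", memory_gb=24, throughput=3.0),
    Device("cpu-node", memory_gb=16, throughput=0.5),
]
tasks = [
    Task("large-llm", memory_gb=70, work=100),
    Task("vision-model", memory_gb=14, work=20),
    Task("small-tts", memory_gb=2, work=3),
]
plan = schedule(tasks, devices)
print(plan)
```

With these made-up numbers, the large model lands on the H100, the mid-sized model on the 3090, and the small model on the otherwise idle CPU node, which is the kind of utilization gain the paragraph above describes. A production scheduler would also need to account for network latency, device availability, and pricing, none of which this sketch models.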